Discuss Notion Music Composition Software here.

8 posts

Page 1 of 1

|

I have been exploring ReWire between Notion and Cubase, and I don't fully understand the right way to set it up.

If I want to record a MIDI string part on a track in Cubase and have the score recorded in Notion at the same time, do I have to create two tracks in Cubase (one MIDI track with my keyboard as input and EWQLSO as output, and one with my keyboard as input and Notion ReWire channel 1 as output) and record both tracks at the same time? I tried it and it works, but afterwards, if I want to play the score from Notion, no sound plays in Cubase, even if I change the input of the EWQLSO MIDI track to Notion ReWire channel 1 ... ?! Thanks for your help, Baba |

|

So I think we have to create different kinds of tracks to manage ReWire in both directions between Cubase and Notion 6.

To control a Cubase instrument with the score from Notion, you have to create an instrument track with:
- input: Notion ReWire channel
- output: the instrument's MIDI channel in Cubase

To record the score from a MIDI controller, going from Cubase to Notion, you have to create a MIDI track with:
- input: your keyboard or another MIDI controller
- output: Notion ReWire channel

So it's simple, but I think recording a score from Cubase is a little slow and complicated, because you have to record in both applications, and it does not seem very reliable ... that's what I found in my experiments. |

|

I don't know how to get bass clef notation; ReWire always defaults to the treble (G) clef ... ?!

|

cellierjerome wrote: If I want to record a MIDI string part on a track in Cubase and have the score recorded in Notion at the same time, do I have to create two tracks in Cubase (one MIDI track with my keyboard as input and EWQLSO as output, and one with my keyboard as input and Notion ReWire channel 1 as output) and record both tracks at the same time? I tried it and it works, but afterwards, if I want to play the score from Notion, no sound plays in Cubase, even if I change the input of the EWQLSO MIDI track to Notion ReWire channel 1 ... ?!

At present I do not have any versions of Cubase, but I am getting the 30-day trial version of Cubase Elements 9.5--which appears to be taking a while, since I have not received the necessary email to do the download and authorization, but so what . . .

I have done a few experiments with earlier versions of Cubase (Steinberg) to determine how it does ReWire (Propellerhead Software), so I know how it works generally, as is the case with nearly all Digital Audio Workstation (DAW) applications now . . .

Depending on how I read what you wrote, it's possible there is something new in Cubase; but until I do some experiments with Cubase Elements 9.5, I cannot say . . .

If Cubase Elements 9.5 supports ReWire MIDI, then it's possible, but only if NOTION 6 ReWire MIDI staves are bidirectional . . .

When I receive the email from Steinberg with the download information, I will do some experiments and a follow-up post . . .

IMPORTANT INFORMATION FOR DOING REWIRE CORRECTLY

There are a few key bits of information to understand, and it takes a while to explain them; so be patient . . .

In some respects, this is vastly complex; but among other things I am a software engineer, which makes it a bit easier for me to understand all this stuff, very little of which is documented clearly in one place, which is the reason I post information in this forum on how to make sense of all this stuff . . .
The encouraging news is that it works and after a while it will make sense . . .

(1) NOTION 6 only records the MIDI input from one MIDI input device to one staff in the score, so at most you can record notes in NOTION from one MIDI input device (typically a MIDI keyboard or MIDI workstation) onto one staff. You need to specify the MIDI input device as the MIDI Input for NOTION, and this is done in NOTION Preferences. You need to click on the staff in the NOTION score onto which you want to record the incoming MIDI from the MIDI input device. This selects the NOTION staff and tells NOTION that it is the staff where incoming MIDI input is to be received. As a general rule, when NOTION is acting as a ReWire slave, the NOTION Record button is not activated automagically when you press the Record button in the Digital Audio Workstation (DAW) application, which in this instance is Cubase. This is a problem, but there is a way to work around it, although it is cumbersome. Basically, you need to create some empty measures in the DAW project and the NOTION score, so that you can click the DAW application Record button and then switch to NOTION and click its Record button . . .

(2) NOTION 6 can output MIDI from External MIDI staves to as many as 64 virtual instruments hosted in the DAW application, and the MIDI Output Ports are specified in NOTION Preferences. You need a virtual MIDI cable (or several, depending on what you are doing), and on the Mac you can create virtual MIDI cables via the "Audio MIDI Setup" application. Windows does not have a native application for doing this, but there are third-party applications that provide the necessary functionality . . .
virtualMIDI (Tobias Erichsen)
LoopBe1 (nerds.de)

(3) NOTION 6 supports ReWire MIDI, and this is the strategy I recommend when you are using Studio One Professional 4 (PreSonus) as your DAW application, which I also recommend strongly, because both of these PreSonus products support ReWire MIDI and interact very nicely. To the best of my current knowledge, these are the only products which support ReWire MIDI this way. In contrast to NOTION External MIDI staves, which can output to as many as 64 Instrument Tracks, NOTION ReWire MIDI staves can output to as many as 256 Instrument Tracks in the DAW application, although in both strategies it is unlikely that so many virtual instruments can be hosted in the DAW application, especially if you are using a lot of effects plug-ins and "heavy" or "resource intensive" virtual instruments in the DAW application.

After doing some experiments, I limit this to 12 or perhaps 16 virtual instruments at a time; and in the "ReWire MIDI Strategy" I compose music notation for this many virtual instruments and then record the generated audio in the DAW application, at which time I use the recorded audio and create a new set of 12 to 16 Instrument Tracks, retiring the previously used 12 to 16 Instrument Tracks, since now they are replaced by the recorded Audio Tracks. I do this in a sequential set of projects (".song" files in Studio One Professional 4), and for the most part I use one or two NOTION scores for this purpose, since ReWire MIDI staves in NOTION 6 have very low overhead. This is explained in detail in one of my ongoing projects in this forum, and the link is found later in this post . . .

(4) It is very important to understand that in a ReWire session, audio and MIDI are separate insofar as NOTION staves and the NOTION Mixer are concerned.
It also is very important to understand that in a ReWire session, the DAW application is responsible for the audio, which in simple terms means that if you want audio generated by NOTION to be heard in a ReWire session, then you need to send the audio from NOTION to the DAW application via ReWire channels that are received by Audio Tracks in the DAW application. And there also is the matter of setting the Input Monitoring buttons correctly in the DAW application . . . (5) When you send MIDI from NOTION 6 to the DAW application, you will send it to Instrument Tracks or MIDI Tracks, depending on the terminology the DAW application uses, although usually they are called "Instrument Tracks". MIDI is sent from NOTION to the DAW application via External MIDI tracks, which since they only do MIDI do not produce any audio and will show no audio activity in the NOTION Mixer, which is the expected behavior. You need to configure the DAW application to receive MIDI from the virtual MIDI cable(s) you have set in NOTION to be used for MIDI Output Ports via NOTION Preferences. Once everything is correctly configured for the External MIDI strategy, it works nicely . . . (6) If you are using Studio One Professional 4 as your DAW application, then you can use ReWire MIDI staves in the NOTION 6 project; and based on the music notation you compose, these ReWire MIDI staves will send the corresponding MIDI to Studio One Professional 4 to play native or hosted virtual instruments on the corresponding Instrument Tracks. You can use the Inspector in Studio One Professional to do the configuring necessary to record the MIDI to the Instrument Tracks, but otherwise you will have the Instrument Tracks route the generated audio to corresponding Audio Tracks which you will use to record the audio generated by the respective native or hosted virtual instruments. 
No matter which DAW application you decide to use, the first time you do this it will take a while, because there is a good bit of configuring that needs to be done. After you have done it a few times, it's easy; and you can make "template" DAW projects and NOTION scores that you can use to make initiating a new song easy, with the key being to open a "template" with all the configurations already done and then immediately doing a "Save As . . . " to create the foundation for a new song, which is what you do in the DAW application and the corresponding NOTION score . . . (7) The correct way to do this is to use NOTION 6 for composing the music notation and to use the DAW application for hosting the native and hosted virtual instruments. You can use a MIDI input device to record music notation in NOTION, but generally you want to do this separately, outside of a ReWire session. Otherwise, you can record MIDI in the DAW application, but again it typically will be one Instrument Track at a time. If you need to get the MIDI from one or more already recorded DAW Instrument Tracks into NOTION, then you can do an export of the MIDI from the DAW application and then import the just exported MIDI to a NOTION score. This is easier to do when you use Studio One Professional 4 and NOTION 6, because they have native import and export functionality that works nicely, which means that the two applications work cooperatively to do everything quickly and accurately with minimal user intervention . . . (8) I have an ongoing project that provides great detail on doing the "ReWire MIDI Strategy" with Studio One Professional 4 and NOTION 6, and it includes YouTube videos that show how to configure everything . . . Project: ReWire ~ NOTION + Studio One Professional (PreSonus NOTION Forum) And I have other posts and topics on ReWire, including a few posts on doing ReWire with earlier versions of Cubase, also in this forum . . . 
(9) For practical purposes, when a DAW application does not support ReWire MIDI fully, all you need to do is use NOTION 6 External MIDI staves with a virtual MIDI cable. One of the key differences is that with ReWire MIDI, you do not need a virtual MIDI cable, because the ReWire infrastructure creates the necessary pipes (UNIX terminology for a virtual MIDI cable in computer nerd language). As noted, I prefer the "ReWire MIDI Strategy", and it's the strategy I use now . . .

SUMMARY

There is a way to record a MIDI input device like a MIDI keyboard in a DAW application and in NOTION at the same time, but it's a bit cumbersome and basically is a hack . . .

This is not the way to do it . . .

The recommended ways are either (a) to record the MIDI in the DAW application and then to send it to the NOTION score or (b) outside of a ReWire session to record the MIDI to a staff in NOTION and then to send the resulting MIDI to the DAW application. If you record to a regular NOTION staff that is configured with a native or NOTION-hosted virtual instrument, it will generate audio that you can hear. I suppose you also can record MIDI to an External MIDI or ReWire MIDI staff, except that you will not hear anything, because neither of these types of NOTION staves produces audio . . .

Unless there are some new features in Cubase, you can do what you need to do but not in the way you think you want to do it . . .

This is a YouTube video I created a few years ago when NOTION 5 was the current version and I was using Digital Performer 8 (MOTU) as the ReWire host controller, with NOTION 5 and Reason 7 (Propellerhead Software) running as ReWire slaves, which for reference probably is something few people actually do; but I like to do experiments like this just to determine what all the software can do . . .
[NOTE: The first part of the YouTube video shows NOTION 5 sending MIDI to Digital Performer 8, where the MIDI is recorded in Digital Performer 8, which as I recall also is playing some Reason 7 synthesizers. Recording MIDI sent from Digital Performer 8 to one External MIDI track in the NOTION score is shown in the second part of the YouTube video. You can get a sense of how complex this is, but once you do it a few times, it becomes easier. There's a lot of new stuff to learn, but it works when you know the rules and follow them exactly . . . ]

This YouTube video shows a ReWire session with Cubase Elements 8 where Cubase is the ReWire host controller and both NOTION 6 and Reason 9 are ReWire slaves. The NOTION score has both External MIDI staves that are playing Reason synthesizers and regular staves that are playing virtual instruments hosted in NOTION. The audio generated by NOTION and Reason is sent to Cubase via ReWire channels, and Cubase Elements 8 handles playing the audio . . .

[NOTE: There is no voiceover in this YouTube video, but so what. The purpose was to show that it works, not to explain how to configure everything . . . ]

And this is a more recent YouTube video that shows how to send MIDI from Digital Performer 9 to play the native NOTION 6 piano by using Digital Performer 9 as a pseudo-MIDI input device, which definitely is a bit of a hack and is not documented anywhere . . .

[NOTE: This works, because Digital Performer 9 allows NOTION 6 to share some of the transport control. If a DAW application does not share transport control, then this hack won't work . . . ]

Lots of FUN!

Surf.Whammy's YouTube Channel

The Surf Whammys Sinkhorn's Dilemma: Every paradox has at least one non-trivial solution! |

|

Thanks for all this information;

- Actually you're right: it seems we can only record if the MIDI port is visible to Notion 6, and with Cubase, Notion doesn't see any MIDI input from Cubase even when ReWire is active ... unfortunately, I think that's the big difference from how Studio One manages the link between the DAW and Notion 6. In the other direction, though, we can have a complete score with several channels in Notion 6 and play instruments assigned to ReWire channels in Cubase without any problem (Cubase Pro 9.5.41 for me), and record that score as MIDI (Notion 6 playing and Cubase recording), which is already cool. To get a MIDI part from Cubase into Notion 6, there is obviously always the workaround of exporting the tracks to a MIDI file and opening it in Notion.
- You can of course assign your MIDI controller (for example, a MIDI keyboard) both as the Notion MIDI input and in Cubase, and record simultaneously in both applications, which avoids exporting the MIDI part from Cubase to Notion (but, as you said, it's one part at a time). |
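For anyone curious what the exported file in that workaround actually contains, here is a minimal sketch in Python that builds a tiny Standard MIDI File (format 0, one track) by hand. The note list and the `build_midi` and `vlq` helper names are made up for illustration; this is not how Cubase or Notion write their files, only the general file layout, assuming 480 ticks per quarter note.

```python
import struct

def vlq(value: int) -> bytes:
    """Encode a non-negative integer as a MIDI variable-length quantity."""
    out = [value & 0x7F]
    value >>= 7
    while value:
        out.append((value & 0x7F) | 0x80)  # continuation bit on all but the last byte
        value >>= 7
    return bytes(reversed(out))

def build_midi(notes, ticks_per_quarter=480, channel=0) -> bytes:
    """Build a format-0 Standard MIDI File from (pitch, duration_in_ticks) pairs."""
    track = bytearray()
    for pitch, duration in notes:
        track += vlq(0) + bytes([0x90 | channel, pitch, 64])        # note on, velocity 64
        track += vlq(duration) + bytes([0x80 | channel, pitch, 0])  # note off after duration
    track += vlq(0) + b"\xff\x2f\x00"                               # end-of-track meta event
    header = b"MThd" + struct.pack(">IHHH", 6, 0, 1, ticks_per_quarter)
    return header + b"MTrk" + struct.pack(">I", len(track)) + bytes(track)

# Hypothetical example part: a C major arpeggio in quarter notes.
data = build_midi([(60, 480), (64, 480), (67, 480), (72, 480)])
print(len(data), "bytes")
```

Writing `data` to a file opened in binary mode (for example a hypothetical `strings_part.mid`) gives something a notation program should be able to import. The file carries pitches and timing only, with no clef or staff layout, so the importing program has to decide those itself.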

cellierjerome wrote: Thanks for all this information;

Audio and MIDI are separate . . .

When the instruments are hosted in NOTION 6, you can send the generated audio to the DAW application via ReWire channel pairs, and there are 32 available channel pairs (1-2, 3-4, . . . 63-64) in NOTION for doing this, which does not require a virtual MIDI cable . . .

This occurs when you use regular NOTION staves, which also can be VST Instrument and VST Preset staves, but here you are sending audio to the DAW application that is the ReWire host controller for the ReWire session . . .

Sending MIDI from NOTION 6 to a DAW application is a different matter, and it's done on either (a) External MIDI staves or (b) ReWire MIDI staves . . .

When you are using External MIDI staves, you need a virtual MIDI cable; but when you are using ReWire MIDI staves, you do not need a separate virtual MIDI cable, because the ReWire infrastructure provides the interapplication communication pipes . . .

For reference, when you are using VSTi virtual instruments in NOTION 6, the music notation on the NOTION staves is converted to MIDI, and NOTION sends this MIDI to the VSTi virtual instruments to tell the VSTi virtual instrument engines what they need to do to generate the respective audio that the VSTi virtual instrument engines then send back to NOTION . . .

Behind the scenes, NOTION 6 is a MIDI sequencer; but what you see on the various staves in NOTION is music notation, although there is the option to see "piano roll" representations of the MIDI that corresponds to the music notation . . .

If you want to send MIDI from NOTION 6 to the DAW application, then you do this on either External MIDI staves or ReWire MIDI staves, and which one to use depends primarily on the particular MIDI technology the DAW application supports . . .

Studio One Professional 4 supports receiving MIDI from External MIDI staves and from ReWire MIDI staves, with ReWire MIDI staves being the newest technology . . .
You can send MIDI to NOTION 6, but it's limited to one staff at a time . . . As noted, it's possible that the current version of Cubase supports bidirectional MIDI to NOTION 6 ReWire MIDI staves, but I am not certain that NOTION 6 ReWire MIDI staves are bidirectional . . . Additionally, it's unlikely that pressing the Record button on the DAW application transport will cause NOTION 6 to begin recording simultaneously in a ReWire Session . . . When you send audio generated by NOTION 6 to Audio Tracks in the DAW application, this is separate and independent of anything involving MIDI. You only are sending NOTION generated audio via ReWire channel pairs to the DAW application, where the audio is received and played by the DAW application using stereo Audio Tracks mapped to the corresponding NOTION ReWire channel pairs . . . MIDI is different, and it is sent from NOTION 6 to the DAW application via either External MIDI staves or ReWire MIDI staves (if the DAW application fully supports ReWire MIDI), and both of these types of NOTION MIDI staves do not generate audio in NOTION, so there is no audio to send to the DAW application . . . MIDI staves in NOTION 6 are used to play DAW-native and DAW-hosted AUi virtual instruments (Mac-only) and VSTi virtual instruments (Mac and Windows) . . . Lots of FUN!
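To make the channel-pair numbering above concrete, here is a minimal sketch in Python that enumerates the 32 stereo ReWire pairs (1-2, 3-4, ... 63-64) and hands them out to staves in order. The staff names and the `assign_pairs` helper are hypothetical; in practice the assignment is made by hand in the NOTION Mixer and on the matching Audio Tracks in the DAW application.

```python
REWIRE_PAIR_COUNT = 32  # stereo pairs 1-2, 3-4, ... 63-64, as described in the post

def rewire_pairs(count: int = REWIRE_PAIR_COUNT):
    """Return the stereo channel pairs as (left, right) tuples."""
    return [(2 * i + 1, 2 * i + 2) for i in range(count)]

def assign_pairs(staff_names):
    """Give each audio-producing staff its own pair, in score order."""
    pairs = rewire_pairs()
    if len(staff_names) > len(pairs):
        raise ValueError("more staves than available ReWire channel pairs")
    return dict(zip(staff_names, pairs))

# Hypothetical string section hosted in the notation application.
routing = assign_pairs(["Violins I", "Violins II", "Violas", "Cellos"])
for staff, (left, right) in routing.items():
    print(f"{staff}: ReWire channels {left}-{right}")
```

This only covers the audio side. External MIDI and ReWire MIDI staves produce no audio, so they would not take up a channel pair.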

Last edited by Surf.Whammy on Mon Nov 12, 2018 10:53 am, edited 2 times in total.

Surf.Whammy's YouTube Channel

The Surf Whammys Sinkhorn's Dilemma: Every paradox has at least one non-trivial solution! |

|

Unfortunately, I don't think the Cubase/Notion situation will improve, because Notion is now tied to Studio One and Cubase is now tied to Dorico. Good integration between Cubase and Dorico Elements is supposed to appear soon, and I'll be obliged to leave Notion ... it's annoying because I like Notion very much, but it's politics between the brands.

|

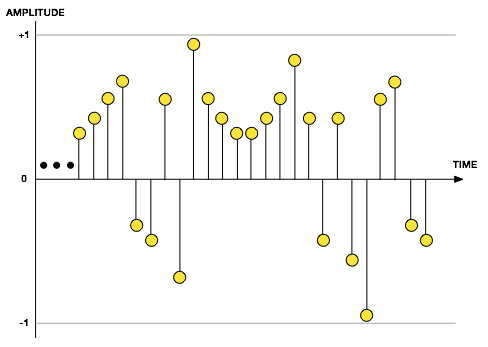

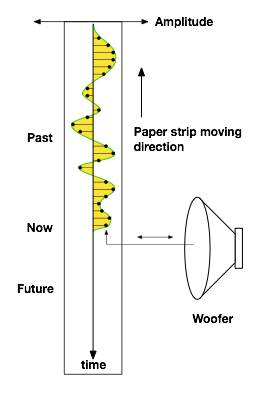

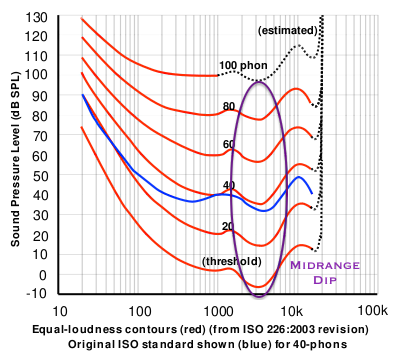

cellierjerome wrote: Thanks for all this information;

Yes, this is correct . . .

Going back-and-forth between Studio One Professional 4 and NOTION 6 is considerably easier due to the unique interoperability that PreSonus added to Studio One and NOTION, which among other things avoids the need to do a separate export from Studio One and then a separate import of the MIDI file ("*.mid") to NOTION, which can be a bit awkward, since the import to NOTION creates a new score. To get the resulting music notation into the score you want, you have to copy and paste from the new score to corresponding new staves in the score where you want the MIDI, which is converted to music notation automagically or if not by a menu option . . .

With the new PreSonus interoperability, you just tell Studio One to send the selected MIDI to NOTION and it happens automagically, which is very nice . . .

You have the option to send the MIDI from Studio One to the current NOTION score, but you also can select to send it to a new NOTION score . . .

If you use the "correct" names for the virtual instruments in Studio One and NOTION, then the transfer happens as you expect it should happen, but if you use custom names, then it works a bit differently, since neither application knows which native or virtual instruments to use . . .

Nevertheless, both applications are good at determining how to do the mappings; and as I recall, if you have a staff in NOTION 6 assigned to a Kontakt (Native Instruments) VSTi virtual instrument, this information is transferred to Studio One, which then creates the corresponding Instrument Track and populates it with the matching Kontakt VSTi virtual instrument . . .

Or something like that . . .

I have not done this type of transfer in a while, but my recollection is that I was surprised pleasantly with how much associated information was sent and received.
It's a smart process, and since both applications are PreSonus products, all the internal information and mappings are available, so it really is automagical . . .

THOUGHTS

From the perspective of software engineering, it's certainly possible to add new features to Studio One and NOTION so that MIDI can be recorded in NOTION to multiple staves rather than to just one NOTION staff at a time . . .

If ReWire MIDI technology is bidirectional, then this might not be so difficult to do; but even though I am a registered third-party developer for ReWire and Reason Rack Extensions, I don't know at present whether ReWire MIDI is bidirectional . . .

Reason 9 does not support ReWire MIDI, even though ReWire is a Propellerhead Software technology and Reason is a Propellerhead Software product . . .

I have Reason 10, but I have not experimented with it to determine whether it supports ReWire MIDI like Studio One and NOTION do; but it might . . .

For reference, Reason only works as a ReWire slave, which is fine with me; and it's the gold standard for verifying that an application correctly supports ReWire, either fully or partially . . .

All applications that support ReWire must provide at least basic ReWire functionality, but there is a lot more to ReWire than the basic functionality . . .

In this respect, PreSonus supports more of the advanced ReWire functionality, which is nice . . .

Overall, I think the primary reason that NOTION has limits on what it supports is a matter of the amount of advanced software engineering that happens internally, which needs to be balanced at all times by the importance of being able to generate audio and MIDI in real-time on the fly rapidly without causing delays, skips, and so forth . . .
On the screen you see music notation, but when you are using VSTi virtual instruments, the music notation you see on the screen is translated to MIDI in real-time and is sent to the VSTi virtual instrument engines to tell them what to do to create the audio that they send back to NOTION, which is what you hear; and this alone is a mind-boggling bit of software engineering that simply amazes me . . . I have a Computer Science degree and four decades of experience in Windows, Mac, and mainframe software designing and engineering; and I could design and program NOTION all by myself--in about 100 years . . . Being fair to myself, I did not do any music programming until recently, which was when I decided to design and program a Rack Extension for Reason; so if I did this all the time, maybe it would be easier and faster . . . The biggest hurdle was getting a sense of what happens when you work with what colloquially are called "lollipops"; and this took about two years to begin making sense . . . For reference, when you observe that a digitally sampled library of sounds is set to work at 44,100 samples per second with a standard bit-depth of perhaps 16-bits, the key information is that each sample is a "lollipop" . . . A "lollipop" has exactly two parameters, (a) when it arrives or occurs and (b) an amplitude, which is in the range from -1 to 0 to +1, and that's everything that can be known about a "lollipop" at the most basic level . . . Being a musician (guitar and bass, primarily), I have a very good sense of how notes and chords blend to create a song; and I can listen to songs and hear most of the parts individually, although hearing everything distinctly usually requires studying a recording for quite a while . . . 
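The "lollipop" idea can be sketched in a few lines of Python: each sample is just an arrival index plus an amplitude between -1 and +1, and one second at CD quality is 44,100 of them. The 440-Hz test tone and the `sine_samples` and `to_pcm16` helper names are assumptions for illustration only; this says nothing about how any particular DAW stores audio internally.

```python
import math

SAMPLE_RATE = 44_100  # samples ("lollipops") per second at CD quality
BIT_DEPTH = 16        # bits per sample at CD quality

def sine_samples(freq_hz: float, duration_s: float, sample_rate: int = SAMPLE_RATE):
    """Return (arrival_index, amplitude) pairs for a sine tone, amplitude in -1..+1."""
    count = round(duration_s * sample_rate)
    return [(n, math.sin(2 * math.pi * freq_hz * n / sample_rate))
            for n in range(count)]

def to_pcm16(amplitude: float) -> int:
    """Map an amplitude in -1..+1 to a signed 16-bit integer value."""
    scale = 2 ** (BIT_DEPTH - 1) - 1            # 32767
    clipped = max(-1.0, min(1.0, amplitude))    # guard against out-of-range input
    return round(clipped * scale)

one_second = sine_samples(440.0, 1.0)
print(len(one_second), "samples in one second")
print([to_pcm16(a) for _, a in one_second[:5]])  # first few samples as 16-bit values
```

The arrival index is an integer and the amplitude is a real number, which matches the two-parameter description above. The 16-bit step is only the storage format; a DAW may well work with floating-point amplitudes internally.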
Switch to the "Lollipop Universe", and what happens is that one second of audio--which can be a kick drum or an orchestra playing a Mozart symphony--is fully and accurately represented at standard CD audio quality by 44,100 "lollipops", each of which has exactly two, very simple parameters (arrival time and amplitude); and that's it . . .

How do you make sense of that?

I am making progress in discovering how to think about music in terms of "lollipops", but it's not so easy . . .

For reference, the term "lollipop" derives from the fact that when graphed, a series of samples looks like a bunch of Tootsie Roll Pops . . .

[NOTE: At standard CD quality audio, this set of "lollipops" is about six ten-thousandths of a second of audio, which could be six ten-thousandths of a second of kick drum or a Mozart symphony being played by a 128 piece orchestra accompanied by an equally populated choir . . . ]

Another way to get a sense of "lollipops" is to watch or touch the paper cone of a loudspeaker, since "lollipops" tell the loudspeaker cone how to move forward and backward . . .

Behind the scenes, internal to the code for Studio One and NOTION, the audio is manipulated, rendered, and played in "Lollipop Land", which to me is simply amazing . . .

This is the case with all DAW applications, not just Studio One; and they all do "lollipops" internally . . .

When you play a song on your iPhone or smartphone, it's "lollipops" . . .

There is a lot more happening in the code for music applications than most folks imagine . . .

This is a good way to put "Lollipop Land" into a more musical perspective . . .

[NOTE: The x-axis shows the frequency, while the y-axis shows the amplitude or volume level. The x-axis is logarithmic, so the first third is frequencies from 0 to 100 cycles per second (or "Hertz" [Hz]). The next third is 100-Hz to 1,000-Hz; and the next one is 1,000-Hz to 10,000-Hz.
There is a fourth region at the far-right, and it's 10,000-Hz to 22,000-Hz, but there is not much happening there. From this you can infer that most of what happens in a song occurs in the first two regions starting at the left, where the leftmost region mostly is the deep bass of the kick drum, electric bass, and snare drum, along with some of the tom-toms. If Elvis or the Beatles were singing, then most of what they sing would be in the next region; and the higher frequencies mostly provide clarity and distinctiveness to the sounds, hence they are important, as well. The audio above about 15,000-Hz mostly is provided to entertain or annoy cats and dogs. The y-axis is more linear, except that volume is logarithmic (decibels); so in a roundabout way it's logarithmic, too. In this song, the lead guitar is real, but all the other instruments are virtual and are done with music notation in NOTION. Sometimes it's easier for me to play a lead guitar part than to try to do it with music notation, which in this instance mostly was a matter of doing the slow string-bends and slides (glissandi) . . . ]

The overall shape of the frequency curve is easier to understand when you consider the "Equal Loudness Contour" that maps to the way humans perceive the various frequency ranges, where for example the perception of deep bass is not so good, while there is a specific part of the midrange which is perceived so readily that it requires more control to keep it from being overpowering . . .

This also is the reason that you need to mix while listening to the music played through calibrated full-range studio monitors with a flat equal-loudness curve from 20-Hz to 20,000-Hz at 85 dB SPL measured with a dBA weighting, since otherwise you cannot trust what you hear and it will ruin a mix.
It's also one of the primary reasons that mixing with headphones does not work for anything other than doing a tiny bit of fine-tuning for headphone listeners, with the equally important reason being that when you mix while listening with headphones, each ear hears something which is separate and independent, which does not occur when you listen to playback through studio monitors (unless you are doing a monaural mix, of course) . . .  [NOTE: The way this works is that, for example, to hear the low-pitch "E" string on Paul McCartney's Höfner Beatle Bass it needs to be at least 50 dB SPL, but to hear a note in the "Midrange Dip", it only needs to be -5 dB SPL, which is not much at all. The "flat" aspect of the equal loudness curve means that the studio monitor system is adjusted so that when graphed, the frequency response across the audio perception spectrum is flat (horizontal, if you prefer); and this indicates that, in the same example, the deep bass response of the studio monitor system is 50 dB SPL but the "Midrange Dip" response is -5 dB SPL. In other words, you use an equalizer--typically external--to adjust the response of the studio monitors to match the "Equal Loudness Contour" at 85 dB SPL measured with a dBA weighting and at 90 dB SPL measured with a dBC weighting. The result is a flat curve when measured, which makes sense when you think about it a while; and this ensures that what you hear is not biased, hence can be trusted. You need to be able to trust what you hear; and calibrating a full-range studio monitor system is the only way to achieve this goal, which in turn maps to needing a pair of deep bass subwoofers, since this is the only practical way to get the deep bass sufficiently loud to achieve a flat equal-loudness curve, especially if you want to push it down to 10-Hz, which is what I do here in the sound isolation studio, even though technically it is subsonic and is felt rather than heard . . . 
] Equal Loudness Contour ~ Wikipedia [NOTE: I prefer a bit more deep bass, so I check the studio monitor calibration using both dBA and dBC weightings using a Nady DSM-1 Digital SPL Meter, which is affordable and reasonably accurate. 85 dB SPL is the goal, but if it goes to 90 dB SPL when I switch it to dBC weighting, this is fine, and it's within OSHA guidelines for listening up to 8 hours a day, more or less . . . ] A-weighting ~ Wikipedia This is a software utility done by MOTU, and it happens in real-time on the fly, so not only is the audio being played in this instance by Digital Performer (MOTU) but also the "lollipops" are being analyzed and separated by frequency and volume level, which is a lot of computing . . . This visualization is done by doing Fast Fourier Transforms on the stream of "lollipops", which separates the embedded information by frequencies and corresponding amplitudes or volume levels, and it's done with mathematical equations discovered by Joseph Fourier in 1822, nearly 200 years ago . . . There are significantly fewer "lollipops", perhaps by a factor of 100 or more . . . Just guessing, but if you pause the YouTube video, what you see might be what you hear in a few milliseconds or thereabouts of a stream of lollipops . . . At standard CD quality audio, there are 44.1 "lollipops" per millisecond, which is for any type of audio that humans with normal hearing can perceive . . . Kick drum or Mozart symphony? It doesn't matter, because both are fully and accurately represented by 44.1 "lollipops" per millisecond, except that "lollipops" are discrete, so there are no tenths of a "lollipop", which maps to lollipops being integer rather than real, mathematically, although the amplitude parameter of a "lollipop" is a real number between -1 and +1, but the arrival time is discrete and is integer. The "lollipop" arrives, and it's a complete entity at that moment in time. There are no fractional "lollipops" . . . Lots of FUN!
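As a rough illustration of separating a stream of "lollipops" by frequency, here is a minimal sketch in Python. It uses a plain discrete Fourier transform written out longhand rather than a Fast Fourier Transform (the FFT gives the same numbers, only much faster), and it is certainly not the MOTU implementation. The two-tone test signal and the `dft_magnitudes` and `strongest_bins` helper names are assumptions for illustration.

```python
import cmath
import math

SAMPLE_RATE = 44_100                  # CD-quality samples per second
SAMPLES_PER_MS = SAMPLE_RATE / 1000   # 44.1 samples per millisecond

def dft_magnitudes(samples):
    """Return the amplitude at each frequency bin from 0 Hz up to half the sample rate."""
    n = len(samples)
    magnitudes = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(samples))
        magnitudes.append(2 * abs(acc) / n)  # this scaling recovers sine amplitude for k > 0
    return magnitudes

def strongest_bins(samples, count, sample_rate=SAMPLE_RATE):
    """Return (frequency_hz, amplitude) for the strongest non-DC bins, sorted by frequency."""
    mags = dft_magnitudes(samples)
    bin_hz = sample_rate / len(samples)
    ranked = sorted(range(1, len(mags)), key=lambda k: mags[k], reverse=True)[:count]
    return sorted((k * bin_hz, round(mags[k], 3)) for k in ranked)

# 10 milliseconds of audio is 441 samples, which gives frequency bins 100 Hz apart.
n = 441
signal = [1.0 * math.sin(2 * math.pi * 100 * i / SAMPLE_RATE)
          + 0.5 * math.sin(2 * math.pi * 1000 * i / SAMPLE_RATE)
          for i in range(n)]
peaks = strongest_bins(signal, 2)
print(SAMPLES_PER_MS, "samples per millisecond")
print(peaks)
```

The test signal mixes a loud 100-Hz tone with a quieter 1,000-Hz tone, and the two strongest bins should land at those two frequencies with roughly the original amplitudes. A real-time analyzer does the same kind of calculation over and over on short windows of the incoming samples.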

Surf.Whammy's YouTube Channel

The Surf Whammys Sinkhorn's Dilemma: Every paradox has at least one non-trivial solution! |
